If you’ve been on social media this week, you’ve likely seen the viral video of identical twins facing a hiccup at the Mumbai Airport gates. It’s been dubbed the “Sita-Gita” moment for Digi Yatra.

I would have preferred a “Luv-Kush” moment, since they are confirmed twins in the Valmiki Ramayana. But as with anything else, social media (and the media) leaves you with little choice, so I will stick with the “Sita-Gita” moment for now.

Let us confess: it was funny, it was relatable, and yes, it was a friction point. While some sensational headlines called it a “glitch” or a “failure,” a few users, in the comments and in separate (yes, not-so-viral) posts, nailed the engineering reality: “The system is doing the right thing by flagging it.”

They were absolutely right, and this detailed article was long overdue. I thought I’d share why something that sounds so simple and obvious actually comes down to a deliberate choice we made to protect your privacy. If you care (and you do, at least about your privacy), please read on.

The Global Reality: It’s Not Just Twins

First, let’s zoom out. This isn’t just a Digi Yatra issue; it’s a physics issue. From smart gates anywhere in the world to Aadhaar onboarding to the face biometric on your phone, every biometric system battles the same fundamental constraints. Facial verification relies on mapping unique landmarks (nodal points) on your face. (Recall that quintessential image used to depict a facial scan, with connected lines all around!)

Unfortunately, the system doesn’t care how you look. To the algorithms, we are all just a mathematical formula.

When the real world messes with those maps, the confidence score drops. Apart from the “Twin Paradox,” there are a few more known limitations that every engineer globally is managing daily:

The Lighting Trap: Extreme backlighting or harsh neon glare washes out depth.

The “Disguise”: Masks, chunky sunglasses, or hats cover the very landmarks the math relies on.

Big Emotions: Extreme joy, anger, or surprise temporarily warps facial geometry.

Biological Drift: A new beard (only if it obscures the facial landmarks), significant aging, or an injury can make you look different from your reference image.

The Angle: The camera needs a frontal view. If you are looking at your watch, talking on a phone, or taking the photo while lying down, the system sees a profile, not a clear frontal face.

Environmental Noise: Fog, rain, taking the photo in a moving car, or a crowded background introduces “noise.”

Training Bias: Algorithms are only as good as their training data. No-brainer here: even we humans are only as good (or bad) as our, shall we say, training (not saying “education” is deliberate!).

In aviation security, we manage two risks: False Rejection (stopping the right person) vs. False Acceptance (letting an imposter in). We default to “Stop” rather than risk a security breach.

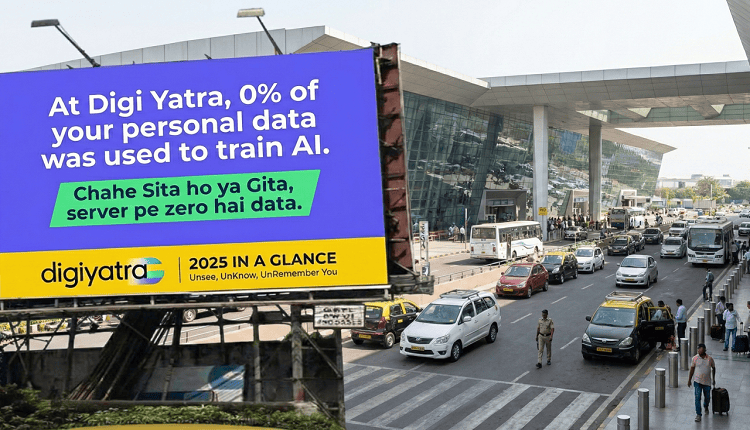
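The two risks above pull against each other through a single knob: the match threshold. Here is a minimal sketch of that trade-off in Python. The scores, the thresholds, and the `error_rates` helper are all made up for illustration; they are not Digi Yatra's actual numbers or code.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false_rejection_rate, false_acceptance_rate) at a threshold.

    A score at or above the threshold counts as "match".
    """
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    return (false_rejects / len(genuine_scores),
            false_accepts / len(impostor_scores))


# Hypothetical similarity scores in [0, 1], invented for this example.
GENUINE = [0.95, 0.91, 0.88, 0.79, 0.72]   # right person, varying conditions
IMPOSTOR = [0.20, 0.35, 0.41, 0.55, 0.83]  # wrong person; 0.83 could be a twin

for threshold in (0.60, 0.75, 0.90):
    frr, far = error_rates(GENUINE, IMPOSTOR, threshold)
    print(f"threshold={threshold:.2f}  FRR={frr:.0%}  FAR={far:.0%}")
```

With these invented numbers, raising the threshold from 0.60 to 0.90 removes the false acceptance (the twin-like 0.83 score) but rejects three of the five genuine attempts. Defaulting to “Stop” means living nearer that strict end.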

The “Learning” Dilemma: Why Our AI Doesn’t “Get Smarter” From Your Data

This brings me to a critical point raised by many experts online: “Why doesn’t the model train on these edge cases to get better over time?”

Here is the hard truth: We intentionally prevent the AI from learning from you.

In a standard AI company, when a model fails, engineers grab that failed image, label it, and feed it back into the system to “retrain” the model. We cannot do that.

Because Digi Yatra operates on a Zero-Data Architecture:

We do not store your face (forget the face, we store nothing; we do not have storage).

We do not store your “failed scan” images.

We do not create a database of Indian faces to train algorithms.

To train the AI, we would need to hoard your data. We refused to do that. We rely on pre-trained, globally benchmarked models rather than using our passengers as guinea pigs for data collection.

If you have a model trained to tell Sita from Gita (or Luv from Kush), we would love to hear from you.

The “Master Switch” Myth: One App, Many Airports

Another common question is: “If there is a fix, why isn’t it live everywhere yet?”

There is a misconception that Digi Yatra is a centralized cloud where we push code once, and it updates everywhere instantly. It is not.

Digi Yatra is a decentralized ecosystem.

Local Infra: Each airport deploys and operates its own local infrastructure, servers, and facial algorithms.

Local Brains: The facial verification system at Airport A operates independently from Airport B.

No Single Point of Failure: This is a deliberate design to ensure smooth integration of Digi Yatra with existing airport systems and, above all, to support decentralization, scale, and stability for a population-scale system.

The trade-off? Updates are not instant. We cannot simply flip a master switch at HQ. We have to work with each airport operator individually to upgrade their specific local stack. That is why you see features (and fixes) rolling out in phases rather than all at once.

The “Enrollment Check” Approach – And Why We Can’t Use It

Similarly, some of you suggested: “Just check for duplicates when they sign up!”

Technically? Easy. Privately? Impossible.

To run a “duplicate check” at enrollment, we would need to compare your face against everyone else’s. That would require a massive central biometric database of millions of users. Who needs another biometric database? We asked. Your biometrics stay only on your phone. We cannot check if “User A” is a twin of “User B” because we don’t hold the data for either of them.

The “More Data” Trap

Other suggestions: “Use retina scans! Use gait analysis!”

To get this extra precision, we’d need to capture more sensitive biological data. While that solves the math, it hurts our privacy-first philosophy. We are committed to Data Minimization. We process the bare minimum needed to get you through the gate.

And yes, we have been doing this responsibly since launch in 2022 – we were not waiting for DPDPA to enforce that. Privacy was never an afterthought. We are Responsible by Design.

Where We Stand Now: The Workflow Fix

So, do twins just have to wait in the manual line? Absolutely not. The solution isn’t “more invasive tech.” It’s a better workflow.

We are asking airport teams to implement a 1:1 fallback mechanism:

The Glitch (1:N): The facial engine searches across everyone, finds that both twins match, and flags it.

The Fix (1:1): You scan your Boarding Pass first.

The Result: The system now only has to answer one question: “Is this the same person who owns this Boarding Pass?”

This effectively eliminates the “Twin Paradox” because the search space shrinks from “Everyone” to “Just You.” This logic is already live at Delhi and Hyderabad entry gates and is being standardized across the network.
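To make the 1:N versus 1:1 difference concrete, here is a toy sketch in Python. Everything in it is hypothetical: the three-number “templates,” the in-memory `GALLERY`, the `THRESHOLD` and `MARGIN` values, and the function names are invented for illustration and do not describe the engines deployed at any airport.

```python
import math

THRESHOLD = 0.95  # assumed minimum similarity to accept a match
MARGIN = 0.02     # assumed minimum gap between best and runner-up in 1:N


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify_1_to_n(probe, gallery):
    """1:N search: return the matching id, or None if nobody matches
    or the top two candidates are too close to call."""
    ranked = sorted(((cosine(probe, t), pid) for pid, t in gallery.items()),
                    reverse=True)
    best_score, best_id = ranked[0]
    if best_score < THRESHOLD:
        return None
    if len(ranked) > 1 and best_score - ranked[1][0] < MARGIN:
        return None  # ambiguous (e.g. twins): flag instead of guessing
    return best_id


def verify_1_to_1(probe, gallery, claimed_id):
    """1:1 check: compare only against the template named by the boarding pass."""
    template = gallery.get(claimed_id)
    return template is not None and cosine(probe, template) >= THRESHOLD


# Toy templates; the twins' vectors are deliberately almost identical.
GALLERY = {
    "sita": [0.90, 0.10, 0.40],
    "gita": [0.89, 0.11, 0.41],
    "ravi": [0.10, 0.95, 0.20],
}
probe_sita = [0.90, 0.10, 0.41]
probe_ravi = [0.12, 0.93, 0.21]

print(identify_1_to_n(probe_ravi, GALLERY))        # unambiguous traveller
print(identify_1_to_n(probe_sita, GALLERY))        # twins: flagged as None
print(verify_1_to_1(probe_sita, GALLERY, "sita"))  # boarding pass names the template
```

In this sketch the 1:N search refuses to pick between the two near-identical templates, while the 1:1 check never looks at the other twin at all, because the boarding pass has already named which template to compare against.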

The Bottom Line: We are building this plane while flying it. The “Sita-Gita” moment wasn’t just a meme; it was a stress test for our philosophy. We could fix the “Twin Problem” tomorrow by building a central database or training AI on your photos. But we won’t. We will fix it by building smarter, privacy-preserving workflows instead.

To the twins (Prashant, his brother, and others) and everyone else testing our edge cases: thanks for keeping us on our toes. You keep finding the limits, and we’ll keep refining the system.

Even the most advanced autopilot occasionally needs a manual override. We are just working to ensure that when the system hands the controls back to you (to scan that boarding pass), it feels less like a glitch and more like a safety feature.

At the end of the day, true intelligence isn’t just about recognizing every face; it’s about knowing when to stop guessing and maybe ask for a boarding pass instead!

Happy Flying! ✈️